I purchased a Whole Genome Sequencing (WGS) test from @DanteLabs for $399 USD in August 2018. My Variant Call Format (VCF) files were ready for me to download in January 2019, which I dissected in a blog post in February: My Whole Genome Sequencing. The VCF File.

My 1 TB hard disk containing my raw data arrived in April 2019. It included my raw reads (FASTQ files) and assembled Ch37 genome (BAM file) in April 20

I was originally intending to then analyze my raw reads and BAM (Binary Sequence Alignment Map) file, but at that time in April, Dante had a deep discount on their Long Reads WGS test that I purchased for $799 USD. So I figured I’d wait until I got the long read results and then analyze and compare both the short read and long read tests together. That would prove interesting and show the strengths and weaknesses of each test and maybe they can work together for improved results.

The FASTQ file

I got my long reads results from Dante in October. They provided only the FASTQ file and provided it online as a single downloadable file.

The file was 199 GB (yes, that’s GB). On my internet connection, it took 12 hours to download. It is a compressed file. I unzipped it to look at it. It took 78 minutes to decompress to 243 GB. It’s a good thing I still had half of my 2 TB internal hard drive free to use.

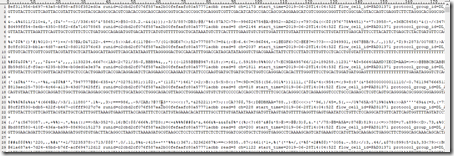

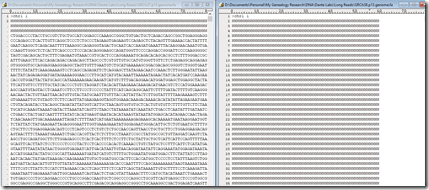

This is what the beginning of my decompressed FASTQ file looks like. Four lines are used per sequence, containing: (1) an identifier and description, (2) the raw sequence letters, (3) a “+” character, and (4) the quality values for each letter in the sequence.

The lines extend further to the right than shown above. The 7 sequences here have 288, 476, 438, 302, 353, 494 and 626 bases. These are some of the shorter sequences in the file. If I go to the 1321st sequence in the file, it contains 6411 bases.

But even that is likely short compared to what some of the longest reads must be. This file is promised to have an N50 > 20,000 bases. That is not an average length, but that means that if you total the lengths of all the sequences that are more than 20,000 bases, then they will make up more than 50% of all the bases. In other words, the N50 is like a length-weighted median.

By comparison, taking a look at my short read FASTQ files, I see that every single sequence is exactly 100 bases. That could be what Dante’s short read Illumina equipment is supposed to produce, or it could have been (i hope not) processed in some way already.

The Alignment Procedure

The FASTQ file only contains the raw reads. These need to be mapped to a standard human genome so that they can be aligned with each other. That should result in an average of 30 reads per base over the genome. That’s what a 30x coverage test means, and both my short read and long read tests were 30x coverage. The aligned results are put into a BAM file which is a binary version of a SAM (Sequence Alignment Map) file.

Dante produced and supplied me with my BAM file for my short reads. But I just learned that they only provide the FASTQ file with the long read WGS test. That means, I have to do the alignment myself to produce the BAM file.

On Facebook, there is a Dante Labs Customers private group that I am a member of. One of the files in the file area are instructions for “FASTQ(s) –> BAM” created by Sotiris Zampras on Oct 22. He gives 5 “easy” steps:

- If using Windows, download a Linux terminal.

- Open terminal and concatenate the FASTQ files.

- Download a reference genome

- Make an index file for the reference genome.

- Align the FASTQs and make a BAM file.

Step 1 - Download a Linux Terminal

Linux is an open-source operating system released by Linus Torvalds in 1991. I have been a Windows-based programmer ever since Windows 3.0 came out in 1990. Prior to that, I did mainframe programming.

I have never used Linux. Linux is a Unix-like operating system.  I did do programming for 2 years on Apollo Computers between 1986 and 1988. Apollo had their own Unix-like operating system called Aegis. It was a multi-tasking OS and was wonderful to work with at a time DOS was still being used on PCs.

I did do programming for 2 years on Apollo Computers between 1986 and 1988. Apollo had their own Unix-like operating system called Aegis. It was a multi-tasking OS and was wonderful to work with at a time DOS was still being used on PCs.

So now, I’m going to plunge in head first and download a Linux Terminal. Sotiris recommended the Ubuntu system and provided a link to the Ubuntu app in the Windows store. It required just one Windows setting change: to turn on the Windows Subsystem for Linux.

Then I installed Ubuntu. It worked out of the box. No fuss, no muss. I have used virtual machines before, so that I could run older Windows OS’s under a newer one for testing my software. But this Ubuntu was so much cleaner and easier. Microsoft had done a lot in the past couple of years to ensure that Windows 10 will run Linux smoothly.

I had to look up online to see what the basic Ubuntu commands were. I really only needed two:

- List files in current directory: ls

List files with details: ls –l - Change current directory: cd folder

The top directory named “mnt” contains the mounted drives.

Step 2 – Concatenate the FASTQ files

My short read FASTQ files were a bunch of files, but my long read file is just one big file, so nothing needed for it.

Step 3 – Download a Reference Genome.

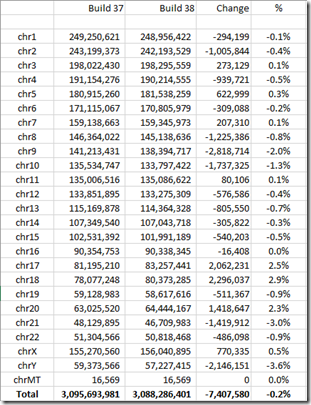

Sotiris gave links to the human reference files for GRCh37.p13 and GRCh38.p13.

For medical purposes, you should use the most recent version, which currently is: March 2019 GRCh38.p13 (aka Build 38, or hg 38). But I’m doing this to compare my results to my raw data from other DNA testing companies. Those are all June 2013 GRCh37.p13 (aka Build 37, or hg 19).

So I’m going to use Build 37.

The two reference genome files are each about 800 MB in size. They are both compressed, and after unzipping, they are both about 3 GB.

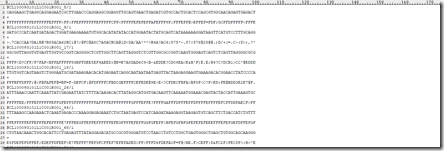

The files are in FASTA format. They list the bases of each chromosome in order. This is what they look like:

Both files denote the beginning of each chromosome with a line starting with the “>” character. That is followed simply by the sequence of bases in that chromosome. In Build 37, each chromosome is one long line, but my text editor folds each line after 4096 characters for displaying. In Build 38 they are in 60 character groups with each group on a new line.

The lines contain only 5 possible values: A, G, C, T or N, where N represents Nucleic acid, meaning any of A, G, C or T. There are usually blocks of N, especially at the beginning and end of each chromosome as you can see above.

Each genome also includes references for chromosomes X, Y, and MT.

Those are followed by a good number of named patches, e.g. GL877870.2 HG1007_PATCH in Build 37 which contains 66021 bases.

Here are the number of bases for the two builds by chromosome:

You’ll notice the number of bases in the reference genome is different in the two Builds by as much as 3.6%. A good article explaining the differences between GRCh37 and GRCh38 is Getting to Know the New Reference Genome Assembly, by Aaron Krol 2014.

Just for fun, I compared the mt Chromosome which has the same number of bases in the two Builds. All bases also have the same value in both Builds. The count of each value is:

- 5124 A

- 2169 G

- 5181 C

- 4094 T

- 1 N

The one N value is at position 3107.

Step 4 – Make an Index File for the Reference Genome

Sotiris didn’t mention this in his instructions, but there were two tools I would have to install. The Burrows-Wheeler Aligner (BWA) and SAMtools.

I was expecting that I’d need to download and do some complex installation into Ubuntu. But no. Ubuntu already knew what BWA and SAMtools were. All I had to do was execute these two commands in Ubuntu to install them:

sudo apt install bwa

sudo apt install samtools

Again. No fuss. No muss. I’m beginning to really like this.

In fact, Ubuntu has a ginormous searchable package library that has numerous genomics programs in it, including samtools, igv, minimap, abyss, ray, sga, canu, and all are available by that simple single line install.

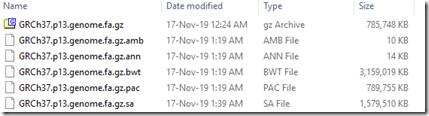

The command to index the Reference Genome was this:

bwa index GRCh37.p13.genome.fa.gz

It displayed step-by-step progress as it ran. It completed in 70 minutes after processing 6,460,619,698 characters. The original file and the 5 index files produced were:

Step 5 – Align the FASTQs and Make a BAM File

The BWA program has three algorithms. BWA-MEM is the newest and is usually the preferred algorithm. It is said to be faster and more accurate and has long-read support. BWA-MEM also tolerates more errors given longer alignments. It is expected to work well given 2% error for an 100bp alignment, 3% error for a 200bp, 5% for 500bp and 10% for 1000bp or longer alignment. Long reads are known to have much higher error rates than short reads, so this is important.

The command for doing the alignment is:

bwa mem -t 4 GRCh37.p13.genome.fa.gz MyFile.fastq.gz | samtools sort -@4 -o FinalBAM.bam

So the program takes my FASTQ file and aligns it to GRCh37. It then pipes (that’s the “|” character) the output to samtools which then creates my resulting BAM file.

I have a fairly powerful Intel i7 four-core processor with 12 GB RAM. The –t 4 and –@4 parameters are telling the program to use 4 threads. Still, I knew this was going to take a long time.

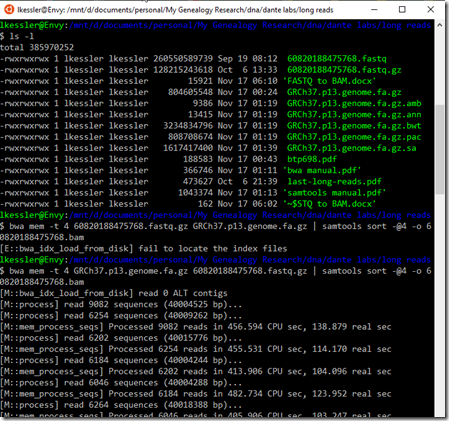

Here’s what I did to start the program:

First I used the ls –l command to list the files in the folder.

Then I ran the command. By mistake, I had the reference genome and my FASTQ in the wrong order and it game me a “fail to locate the index files”. Once I figured out what I did wrong, I ran it correctly.

The display showed the progress every 40 million bp that was processed. That seemed to average about 6200 sequences indicating that, at least to start, the there was an average of about 6450 bases per sequence. Working out the amount of time per base, I can extrapolate the total time needed to being about 102 hours, or a little over 4 days. That’s do-able, so I let it go and was interested to see if it would speed up, slow down, or end up completing when I predicted.

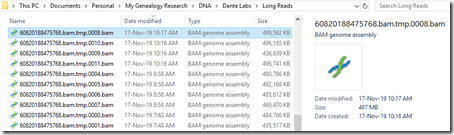

Slowly temporary files were being created:

Every 75 minutes or so, 4 new temporary files (likely for the 4 threads) of about 500 MB each were being created. Don’t you like the icon Windows uses for BAM files? It uses that icon for FASTQ and FASTA files as well.

Now I just had to wait.

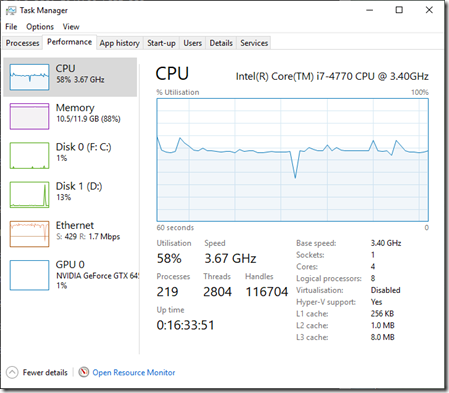

What amazed me while I was waiting was how well Windows 10 on my computer handled all this background processing. I could still do anything I wanted, even watching YouTube videos, with hardly any noticeable delay. So I took a look at the Task Manager to see why.

Even though 10.5 of my 12 GB RAM was being used, only 58% of the CPU was taken. I’m thinking that the 4 thread setting I used for BWA was easily being handled because of the 8 Logical processors of my CPU.

What also impressed me was that my computer was not running hot. Its cooling fan was dispersing air that was at the same temperature I usually experience. My worry before I started this was that days of 100% processing might stress my computer to it’s limits. Fortunately, it seems that’s not going to be a problem.

Wouldn’t You Know It

While I was waiting for the run to complete, I thought I’d look to see what Dante used to align my short read WGS test. For that test they provided me with not just the FASTQ raw read files, but also some of the processed files, including the BAM and VCF files.

I unzipped my 110 GB BAM file, which took 3 hours to give me a 392 GB text file that I could read. I looked at the first 200 lines and I could see that Dante had used BWA-MEM version 0.7.15 to produce the BAM file.

I thought I’d go to BWA’s github repository to see if that was the most recent version. It’s pretty close. 0.7.17 was released Oct 23, 2017. The 0.7.15 version is from May 31, 2016 and the changes weren’t significant.

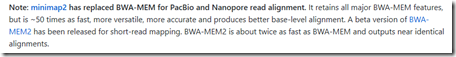

But while there, I was surprised to see this notice:

Seems that minimap2 is now recommended instead of BWA-MEM. Here’s what they say in the minmap2 Users’ Guide:

Minimap2 is a versatile sequence alignment program that aligns DNA or mRNA sequences against a large reference database. Typical use cases include: (1) mapping PacBio or Oxford Nanopore genomic reads to the human genome; (2) finding overlaps between long reads with error rate up to ~15%; (3) splice-aware alignment of PacBio Iso-Seq or Nanopore cDNA or Direct RNA reads against a reference genome; (4) aligning Illumina single- or paired-end reads; (5) assembly-to-assembly alignment; (6) full-genome alignment between two closely related species with divergence below ~15%.

For ~10kb noisy reads sequences, minimap2 is tens of times faster than mainstream long-read mappers such as BLASR, BWA-MEM, NGMLR and GMAP. It is more accurate on simulated long reads and produces biologically meaningful alignment ready for downstream analyses. For >100bp Illumina short reads, minimap2 is three times as fast as BWA-MEM and Bowtie2, and as accurate on simulated data. Detailed evaluations are available from the minimap2 paper or the preprint.

Oh well. It looks like I’ll let the BWA-MEM run finish, and then try running minimap2.

BWA Finally Done

The BWA-MEM program finally completed 5 days and 12 hours later. That’s 132 hours, a bit more than the 102 hours I had estimated. It would have been nice for the progress to be shown with a percentage completed, as I wasn’t entirely sure at any time how much more there was remaining to do. In the end, BWA created 328 temporary BAM files totaling 147 GB.

Following this, BWA reported it had spent 480,409 seconds of real time (133.4 hours) and 1,638,167 of cpu time, a ratio of 3.4 representing the gain it got from using 4 threads.

Then BWA passed off to samtools for the assembly of the final BAM file. There was about an hour of nothing visible happening. Then samtools started creating the BAM file. Windows Explorer showed its progress, with the size of the BAM file being created growing by about 12 KB every second. This took another 3.5 hours and the result was a single BAM file of 145 GB (152,828,327 KB).

Minimap2

After reading that BWA now recommends use of minimap2, and that minimap2 was much faster, more accurate and produces a better alignment, obviously I could not stop with the BAM file I had.

I went back to Ubuntu and ran the following:

sudo apt install minimap2

but I got the message:

I found out it required a newer version of Ubuntu than Windows had supplied in their store. So I followed the instructions: How to Upgrade Ubuntu 18.04 to 19.10 on Windows 10 Linux subsystem by Sarbasish Basu. Then I was able to install and run minimap2.

minimap2 –ax map-ont –t 4 GRCh37.p13.genome.fa.gz MyFile.fastq.gz | samtools sort -@4 -o FinalBAM.bam

where the ax parameter “map-ont” is for Oxford Nanopore long noisy reads.

I ran this. It gave little feedback. After about 6 hours, it told me it mapped 500000 sequences. Then another 6 hours and it mapped another 500000 sequences. It wouldn’t have been so bad if minimap2 was as resource friendly as BWA, but I found it sometimes noticeably impacted my working on my computer. I could still do things, but much slower than normally. What would normally be instantaneous would sometimes take 3 to 30 seconds – to open a browser, my email, etc.

None-the-less, I let it go for 3 days (72 hours) and then canned the program because I needed my computer back. Afterwards, I calculated that there likely are about 20 million long read sequences in my file. Extrapolating time-wise, that would have been about 10 days of running to complete.

YSeq

A few weeks later, in the Genetic Genealogy Tips & Techniques Facebook group, Blaine Bettinger posted regarding his Dante WGS test that he took in November and said that he purchased the “FASTQ Mapping to hg38” from YSeq for $25 and recommended that service.

Since minimap2 didn’t look like it was going to work for me on my computer, I thought using MSeq sounded like a good idea. Since I’m interested in DNA for cousin matching purposes, all the big consumer DNA companies are using Build 37 (i.e. hg19), so I decided to all purchase the “FASTQ Mapping to hg19” from YSeq for an additional $25.

I gave them access to my long read FASTQ files at Dante by setting up a temporary password for them. After about 4 weeks, my results were ready.

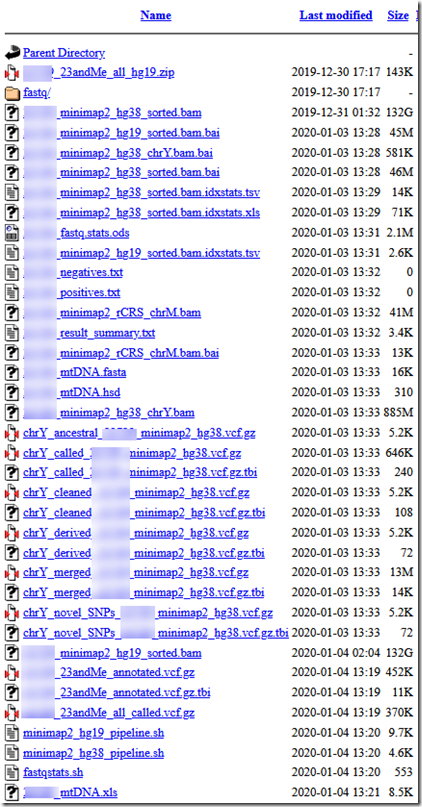

You can see they had a whole bunch of result files for me:

They used the minimap2 program. The files are for both hg19 and hg 38. The minimap2_hg38_sorted.bam file is 132 GB and the minimap2_hg19_sorted.bam file is the same size 132 GB.

There’s also a bunch of Y-DNA results and M (mt) DNA results along with various stats. There’s also a few files with 23andMe in the name that contain my variants in 23andMe’s raw data format. The pipeline files show me exactly what commands were run and I appreciate having those.

YSeq’s email to me telling me my results were ready included my BAM coverage figures: 38.8x for hg38 and 38.4x for hg19, so I achieved more than the 30x coverage that Dante promised. The average read was 5999 bases. That are much longer than a short read WGS test that typically averages 100 bases per read. I don’t have stats on what the N50 was (see earlier in the article for a definition of N50), but Dante promises at least 20,000 and I trust that I got that.

YSeg’s email also gave me my mt haplogroup: K1a1b1a, which is the same as what my Family Tree DNA mtDNA test gave me. And their Y-haplogroup path was the same as my Big Y-500 test at Family Tree DNA. YSeq ended with: R-Y2630 –> R-YP4538 compared to FTDNA: R-Y2630 –> R-BY24982 –> R-BY24978.

YSeq provides BAM files just for mt and Y which is convenient for uploading to services such as YFull. Personally, I’m not that interested in Y and mt because, other than my uncle, none of my matches are close enough to connect on my family tree. I have provided my Y-DNA to the Ashkenazi Levite DNA study and I’ve let them do the tough stuff with it.

Each of the two 132 GB BAM files took me about 22 hours to download at an average download speed of 1.7 MB/second.

So What the Heck Do I Plan To Do With These BAMs?

I’ve now got BAM files that were produced from:

- My short read WGS produced by Dante using BWA-MEM.

- My long read WGS produced by me using BWA-MEM.

- My long read WGS produced by YSeq using minimap2.

Other than scientific curiosity and an interest in learning, I’m mostly interested in autosomal DNA matching for genealogy purposes. I have two goals for these BAMs:

1. To compare the BAMs from my short read and long read WGS test with each other and to the raw data from the 5 SNP-based DNA tests I took. I want to see if from that I can determine error rates in each of the tests and see if I can correct the combined raw data file that I now use for matching at GEDmatch.

2. To see how much of my own DNA I might be able to phase into my two parents. This will be a long term goal. Reads need to contain at least two heterozygous (different value for both parents) SNPs in order to connect each end of them to the next read of the same parent’s chromosome. And there are some very long regions of homozygous (same value for both parents) SNPs. WGS long reads are generally not long enough to span all them. But I’d still like to see how many long segments can be phased.

All this will happen when the time is right, and if I ever get some time.

Joined: Sun, 16 Feb 2020

2 blog comments, 0 forum posts

Posted: Sun, 16 Feb 2020

I’ve never used Linux. What is the command syntax for telling Ubuntu how to locate the files? I have them on a different hard drive from my boot drive.

Joined: Sun, 16 Feb 2020

2 blog comments, 0 forum posts

Posted: Sun, 16 Feb 2020

I figured out what I needed for my setup was cd /mnt/g/Dropbox/DNA