There’s another website I recommend you upload your raw DNA data to: Borland Genetics.

See this video: Introducing Borland Genetics Web Tools

In a way, Borland Genetics is similar to GEDmatch in that they accept uploads of raw data and don’t do their own testing. Once your data is uploaded, you can see who you match and details about each match. Borland Genetics has a non-graphic chromosome browser that lists your segment matches in detail.

But Borland Genetics has a somewhat different focus from all the other match sites. This site is geared to help you reconstruct the DNA of your ancestors and includes many tools to help you do so. You can also search for matches to your reconstructed relatives, and those relatives will show up in other people’s match lists.

Once you upload your raw data and the raw data from a few of your relatives’ tests, you’re ready to use the exotically named tools, which include:

- Ultimate Phaser

- Extract Segments

- Missing Parent

- Two-Parent Phase

- Phoenix (partially reconstructs a parent using raw data of a child and relatives on that parent’s side)

- Darkside (partially reconstructs a parent using raw data of a child and relatives that are not on that parent’s side)

- Reverse Phase (partially reconstructs grandparents using a parent, a child, and a “phase map” from DNA Painter)
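The idea behind Phoenix and Darkside can be illustrated with a simplified per-SNP rule. This is a minimal sketch, not Borland Genetics’ actual algorithm. It assumes genotypes are two-letter strings like "AG", and that the SNP is already known to lie in a segment where the child shares one chromosome with a relative. Inside such a segment, the child’s allele that the relative also carries most likely came from the parent on the relative’s side (what Phoenix reconstructs), and the child’s other allele came from the other parent (what Darkside reconstructs). The helper name `infer_parental_alleles` is hypothetical.

```python
from typing import Optional, Tuple

def infer_parental_alleles(child: str, relative: str) -> Tuple[Optional[str], Optional[str]]:
    """Return (allele from the relative's side, allele from the other side).

    Hypothetical helper. Assumes the SNP lies in a segment where child and
    relative share one chromosome. None means the SNP cannot be resolved.
    """
    c1, c2 = child[0], child[1]
    rel = set(relative)
    if c1 == c2:
        # Homozygous child: both parents passed on the same allele.
        return c1, c1
    in1, in2 = c1 in rel, c2 in rel
    if in1 and not in2:
        return c1, c2
    if in2 and not in1:
        return c2, c1
    # Relative carries both alleles (or neither, likely a genotyping error):
    # we can't tell which allele came from which parent.
    return None, None
```

For example, `infer_parental_alleles("AG", "AA")` gives `("A", "G")`: a Phoenix-style mono kit would record "AA" at that SNP, and a Darkside-style kit would record "GG".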

Coming soon is the ominously named Creeper, which will be guided by an Expert System that uses a disembodied computerized voice to tell you what your next steps should be.

There’s also the Humpty Dumpty merge utility that can combine multiple sets of raw data for the same person, and a few other tools.
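To show what merging several raw data files for one person involves, here is a minimal sketch. I don’t know how Humpty Dumpty resolves conflicts; the policy below (skip no-calls, keep genotypes the kits agree on, turn disagreements into no-calls) is an assumption, as is the 23andMe-style "--" no-call marker and the rsid-to-genotype dictionary layout.

```python
from typing import Dict, List, Set

NO_CALL = "--"  # 23andMe-style no-call marker (assumed)

def normalize(genotype: str) -> str:
    """Sort alleles so 'GA' and 'AG' compare equal."""
    return "".join(sorted(genotype))

def merge_kits(kits: List[Dict[str, str]]) -> Dict[str, str]:
    """Merge several raw data kits (rsid -> genotype) for the same person.

    Assumed policy: skip no-calls, keep a genotype all calling kits agree
    on, and mark conflicting genotypes as no-calls.
    """
    calls: Dict[str, Set[str]] = {}
    for kit in kits:
        for rsid, genotype in kit.items():
            if genotype == NO_CALL:
                continue
            calls.setdefault(rsid, set()).add(normalize(genotype))
    merged: Dict[str, str] = {}
    for rsid, seen in calls.items():
        merged[rsid] = next(iter(seen)) if len(seen) == 1 else NO_CALL
    return merged
```

The benefit of merging is coverage: one company’s no-call, or an SNP it never tested, can be filled in by another company’s kit.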

The above tools are all free at Borland Genetics, and there are a few additional premium tools available with a subscription. You can use them to create DNA kits for your relatives. You can then download those kits if you want to analyze them yourself or upload them to other sites that allow uploads of constructed raw data.

By comparison, GEDmatch has only two tools for ancestor reconstruction: one called Lazarus and one called My Evil Twin. Both are part of GEDmatch Tier 1, so you need a subscription to use them. Also, you can only use the results on GEDmatch, because GEDmatch does not allow you to download raw data.

Kevin Borland

The mastermind behind this site is Kevin Borland. A few years ago, Kevin started building the tools he needed for his own genetic genealogy research, and then, since no such site existed, decided to build one for DNA reconstruction. See this delightful Linda Kvist interview of Kevin from Apr 16, 2020.

In March 2020, Kevin formally created Borland Genetics Inc. and partnered with two others to ensure that this work would continue forward.

If you are a fan of the BYU TV show Relative Race (and if you are a genealogist, you should be), then you should know that Kevin was the first relative visited by team Green in Season 2. See him at the end of Season 2 Episode 1, starting at about 32:24.

Creating Relatives

I have not been as manic as many genetic genealogists in getting relatives to test. I have tested only my own DNA and that of my uncle (my father’s brother). So with only two sets of raw data, what can I do at Borland Genetics?

Well, first I uploaded and created profiles for myself and my uncle.

The database is still very small, currently sitting at about 2500 kits. Not counting my uncle, I have 207 matches, with the largest being 54 cM. My uncle has 86 matches, with the largest being 51 cM. This is interesting because most sites show more matches for my uncle than for me, since he is one generation further back. I don’t know any of the people either of us match with. None of them are likely to be any closer than 4th cousins.

My uncle and I share 1805.7 cM. The chromosome browser indicates we have no FIRs (fully identical regions), so it’s very likely that, despite endogamy, I’m only matching my uncle on my father’s side.
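Why do FIRs matter here? In a half-identical region, two people share one chromosome, so at every SNP they have at least one allele in common. In a fully identical region they share both chromosomes, so both alleles match over a long stretch. The sketch below counts shared alleles per SNP (identity by state, IBS 0, 1 or 2) and reports long runs of IBS2 as candidate FIRs. It is a simplified illustration under stated assumptions: the run threshold `min_snps` is a hypothetical parameter, and real tools measure runs in cM and tolerate genotyping errors, since short IBS2 runs also occur by chance in half-identical regions.

```python
from typing import List, Optional, Tuple

def ibs(g1: str, g2: str) -> int:
    """Count alleles shared identical-by-state between two genotypes (0, 1 or 2)."""
    remaining = list(g2)
    shared = 0
    for allele in g1:
        if allele in remaining:
            remaining.remove(allele)
            shared += 1
    return shared

def fir_runs(a: List[str], b: List[str], min_snps: int) -> List[Tuple[int, int]]:
    """Return (start, end) SNP index ranges, end exclusive, of at least
    min_snps consecutive IBS2 SNPs (candidate fully identical regions)."""
    runs: List[Tuple[int, int]] = []
    start: Optional[int] = None
    n = min(len(a), len(b))
    for i in range(n):
        if ibs(a[i], b[i]) == 2:
            if start is None:
                start = i
        else:
            if start is not None and i - start >= min_snps:
                runs.append((start, i))
            start = None
    if start is not None and n - start >= min_snps:
        runs.append((start, n))
    return runs
```

With a realistic threshold, an uncle and nephew related only through the father should produce no runs at all, which is what the chromosome browser reports here.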

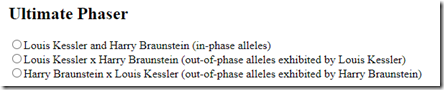

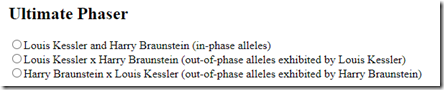

The chromosome browser suggests three Ultimate Phaser options for me to try.

To interpret the results of these, you sort of have to know what you’re doing.

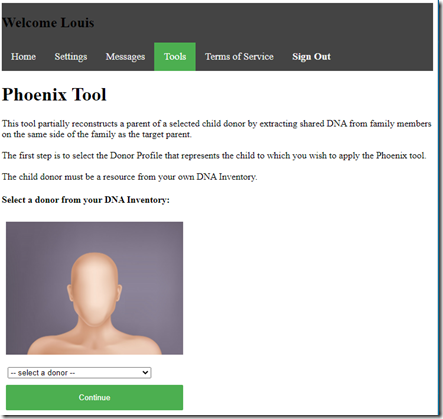

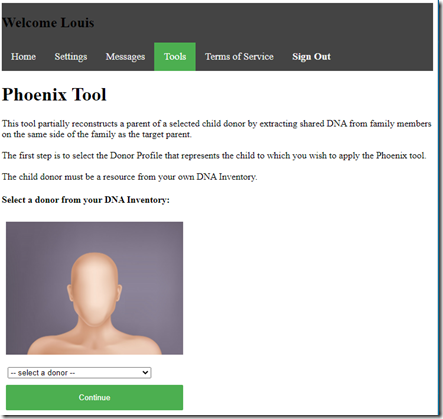

So instead, let me try to create some relatives. For that, I can first use the Phoenix tool.

It allows me to select either myself or my uncle as the donor. I select myself as the donor and press Continue.

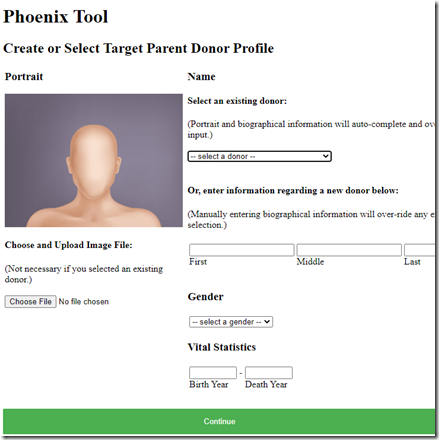

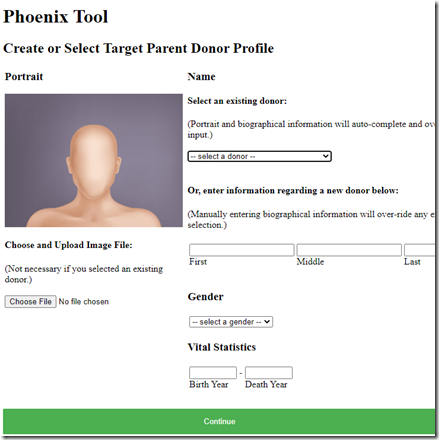

Here I enter information for my father and press Continue.

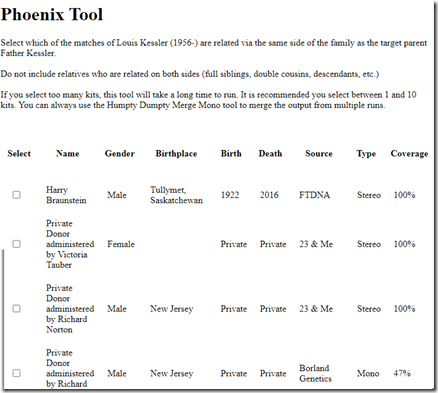

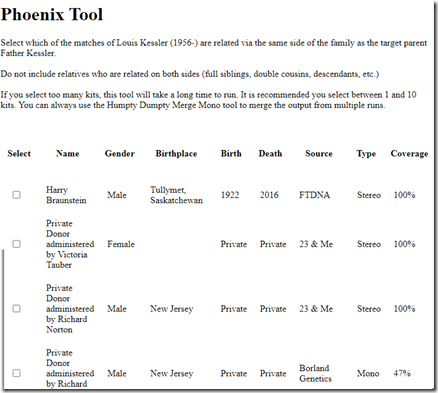

I now can select all my matches who I know are related on my father’s side. You’ll notice the fourth entry lists the “Source” as “Borland Genetics” which means it is a kit the person created, likely of a relative who never tested anywhere.

In my case, my uncle is the only one I know to be on my father’s side, so I select just him. I then scroll all the way down to the bottom of my match list to press Continue.

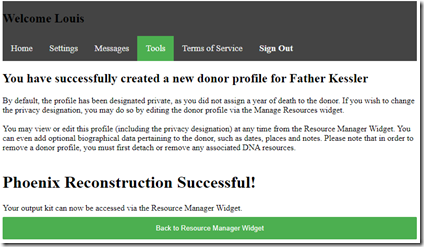

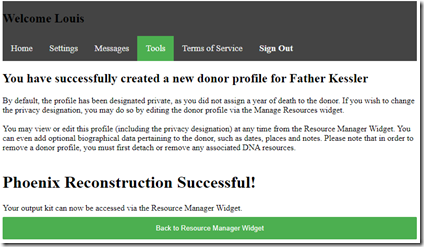

And while I’m waiting, I can click play to listen to some of Kevin’s music. After only about 2 minutes (the site’s time estimate was much too high), the music stopped and I was presented with:

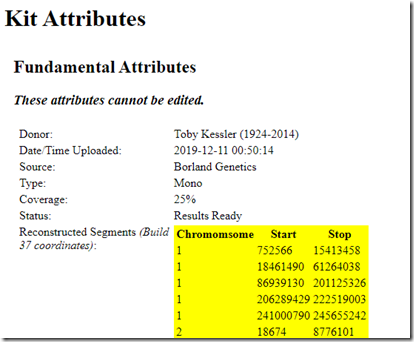

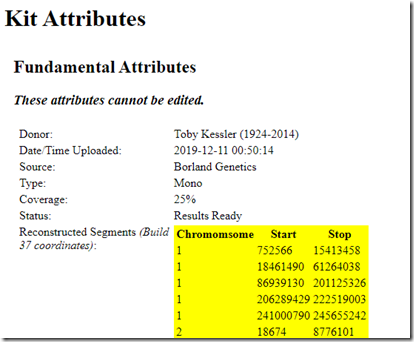

Now I can go to my father’s kit and see what was created for him. His kit type is listed as “Mono” because only one allele (the one on my paternal chromosome) can be determined. The Coverage is listed as 25% because I used his full brother, who shares about 50% of his DNA with him and thus about 25% with me.
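The 25% figure is simple expected-sharing arithmetic, and it can be checked against the 1805.7 cM my uncle and I actually share. The total autosomal length used below (7,000 cM) is an assumed round number; each testing company and genetic map uses a somewhat different total, so the observed percentage is only approximate.

```python
# Expected fraction of autosomal DNA shared, assuming a parent passes on
# half to a child and full siblings share about half.
parent_child = 0.5
full_siblings = 0.5
expected_uncle_nephew = full_siblings * parent_child

# Observed fraction: shared cM over an ASSUMED total autosomal length.
shared_cm = 1805.7
assumed_total_cm = 7000.0
observed_fraction = shared_cm / assumed_total_cm

print(f"expected {expected_uncle_nephew:.0%}, observed {observed_fraction:.1%}")
# prints: expected 25%, observed 25.8%
```

The two numbers agree closely, which is consistent with the reconstructed father covering roughly a quarter of the genome.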

His match list will populate as if he had tested himself.

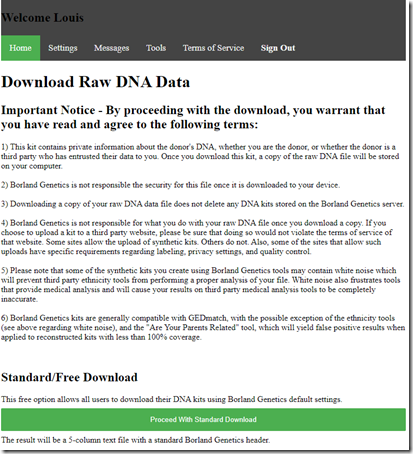

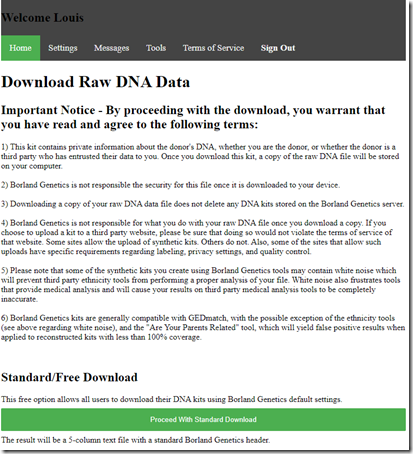

I can download my father’s kit:

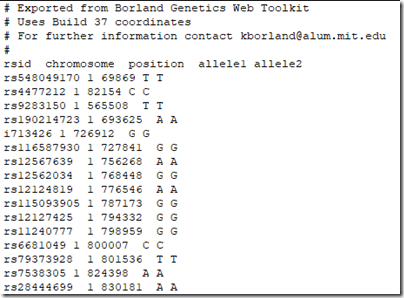

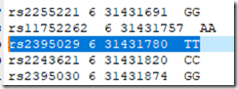

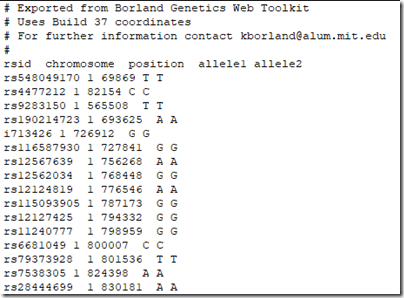

which gives me a text file with his genotype at every tested SNP.

The two values in each pair are identical because this is a mono kit. Also, be sure to use only the SNPs that fall within the reconstructed segments list. There must be an option somewhere to download just the reconstructed segments, but I can’t see it. (Kevin??)
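Until there is such an option, the downloaded file can be trimmed yourself. This minimal sketch assumes a 23andMe-style layout ("#" comment lines, then tab-separated rsid, chromosome, position, genotype) and a segment list of (chromosome, start, end) tuples with inclusive positions; I haven’t confirmed that the Borland Genetics download uses exactly this layout, and `filter_to_segments` is a hypothetical name.

```python
import csv
from typing import Dict, Iterable, List, Tuple

Segment = Tuple[str, int, int]  # (chromosome, start, end), positions inclusive

def filter_to_segments(lines: Iterable[str], segments: List[Segment]) -> List[List[str]]:
    """Keep only SNP rows whose position falls inside a reconstructed segment.

    ASSUMES a 23andMe-style layout: '#' comment lines, then tab-separated
    rsid, chromosome, position, genotype.
    """
    by_chrom: Dict[str, List[Tuple[int, int]]] = {}
    for chrom, start, end in segments:
        by_chrom.setdefault(chrom, []).append((start, end))
    data = (line for line in lines if line.strip() and not line.startswith("#"))
    kept: List[List[str]] = []
    for row in csv.reader(data, delimiter="\t"):
        chrom, pos = row[1], int(row[2])
        if any(start <= pos <= end for start, end in by_chrom.get(chrom, [])):
            kept.append(row)
    return kept
```

The segment positions must use the same genome build as the kit; otherwise the coordinates won’t line up.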

In a very similar manner (which I won’t show here because it is, well, similar), I can use the Darkside tool to create a kit for my mother, using myself as the child and my uncle as the family member on the opposite side of the tree.

Reconstructing Ancestral Bits

Now I have kits for myself, my uncle, my father and my mother. Can I do anything else?

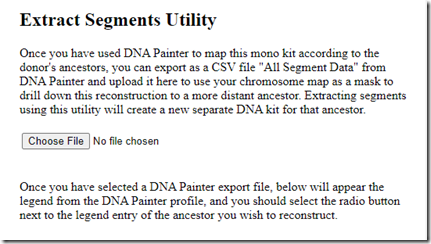

Well yes! I can use my analysis from DNA Painter to define my segments by ancestor.

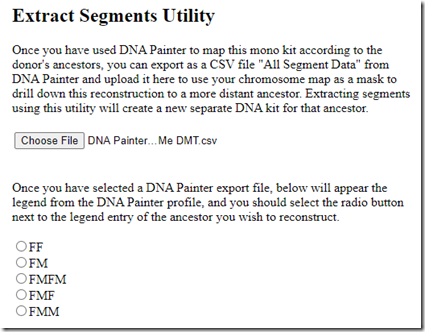

I happened to have already done the DNA Painter analysis, using Double Match Triangulator (DMT). Using DMT, I created a DNA Painter file from my 23andMe data for just my father’s side:

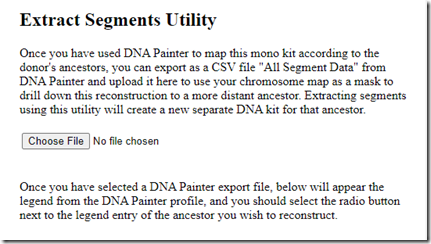

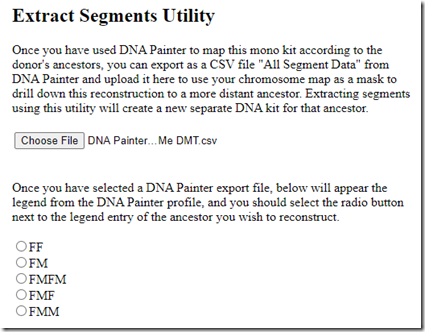

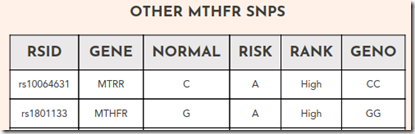

I labelled them based on the ancestor I identified, e.g. FMM = my father’s mother’s mother. I downloaded the segments from DNA Painter and clicked “Choose File” in Borland Genetics and it gave me my 5 ancestors with the same labeling to choose from.
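The labels are just paths up the tree, one letter per generation, starting from me. The sketch below decodes such a label and picks out the segments painted with it. The (label, chromosome, start, end) tuples are hypothetical: DNA Painter’s real export has its own column layout, and `describe` and `segments_for` are illustrative names, not part of either site.

```python
from typing import List, Tuple

STEP = {"F": "father", "M": "mother"}

# Hypothetical painted segment: (label, chromosome, start, end).
PaintedSegment = Tuple[str, str, int, int]

def describe(label: str) -> str:
    """Turn a path label like 'FMM' into "father's mother's mother"."""
    return "'s ".join(STEP[ch] for ch in label.upper())

def segments_for(label: str, painted: List[PaintedSegment]) -> List[Tuple[str, int, int]]:
    """Return (chromosome, start, end) for every segment painted with `label`."""
    return [(chrom, start, end) for lab, chrom, start, end in painted if lab == label]
```

For example, `describe("FF")` returns "father's father", the paternal grandfather whose segments I extract next.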

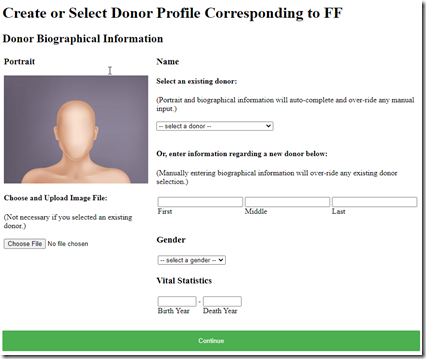

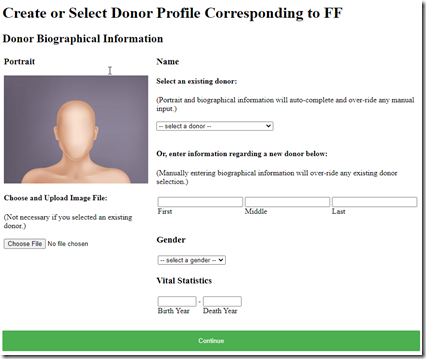

I select “FF”, click on “Extract Selected Segments” and up comes a screen to create a Donor Profile for my paternal grandfather!

Wowzers! I have now just created a DNA profile for a long-dead ancestor, and I can do the same for 4 more of my ancestors on my father’s side.

Just a couple of days ago, I believe I was asking Kevin for this type of analysis. Only today, while writing this post, did I see that he already had it.

Summary

I only have my own and my uncle’s raw data to work with, yet I can still do quite a bit. For people who have parents, siblings and dozens of others tested … well, I’m enviously drooling at the thought of what you can do at Borland Genetics with all that.

There is a lot more to the Borland Genetics site than I have discussed here. There are projects you can create or join, family tree information, and links to WikiTree. You can send messages to other users, and there are advanced utilities available with a subscription.

The site is still under development and Kevin is regularly adding to it. Kevin started a Borland Genetics channel on YouTube, and over the past 2 years he made an excellent 20-episode YouTube series on Applied Genetics. He also runs the Borland Genetics Users Group on Facebook, which now has 738 members. I don’t know how he finds the time.

So now, go and upload your raw data kits to Borland Genetics, help build up their database of matches, and try out all the neat analysis it can do for you.

Feedspot 100 Best Genealogy Blogs